This AI Paper from Segmind and HuggingFace Introduces Segmind Stable Diffusion (SSD-1B) and Segmind-Vega (with 1.3B and 0.74B): Revolutionizing Text-to-Image AI with Efficient, Scaled-Down Models

[ad_1]

Text-to-image synthesis is a revolutionary technology that converts textual descriptions into vivid visual content. This technology’s significance lies in its potential applications, ranging from artistic digital creation to practical design assistance across various sectors. However, a pressing challenge in this domain is creating models that balance high-quality image generation with computational efficiency, particularly for users with constrained computational resources.

Large latent diffusion models are at the forefront of existing methodologies despite their ability to produce detailed and high-fidelity images, which demand substantial computational power and time. This limitation has spurred interest in refining these models to make them more efficient without sacrificing output quality. Progressive Knowledge Distillation is an approach introduced by researchers from Segmind and Hugging Face to address this challenge.

This technique primarily targets the Stable Diffusion XL model, aiming to reduce its size while preserving its image generation capabilities. The process involves meticulously eliminating specific layers within the model’s U-Net structure, including transformer layers and residual networks. This selective pruning is guided by layer-level losses, a strategic approach that helps identify and retain the model’s essential features while discarding the redundant ones.

The methodology of Progressive Knowledge Distillation begins with identifying dispensable layers in the U-Net structure, leveraging insights from various teacher models. The middle block of the U-Net is found to be removable without significantly affecting image quality. Further refinement is achieved by removing only the attention layers and the second residual network block, which preserves image quality more effectively than removing the entire mid-block.

This nuanced approach to model compression results in two streamlined variants:

- Segmind Stable Diffusion

- Segmind-Vega

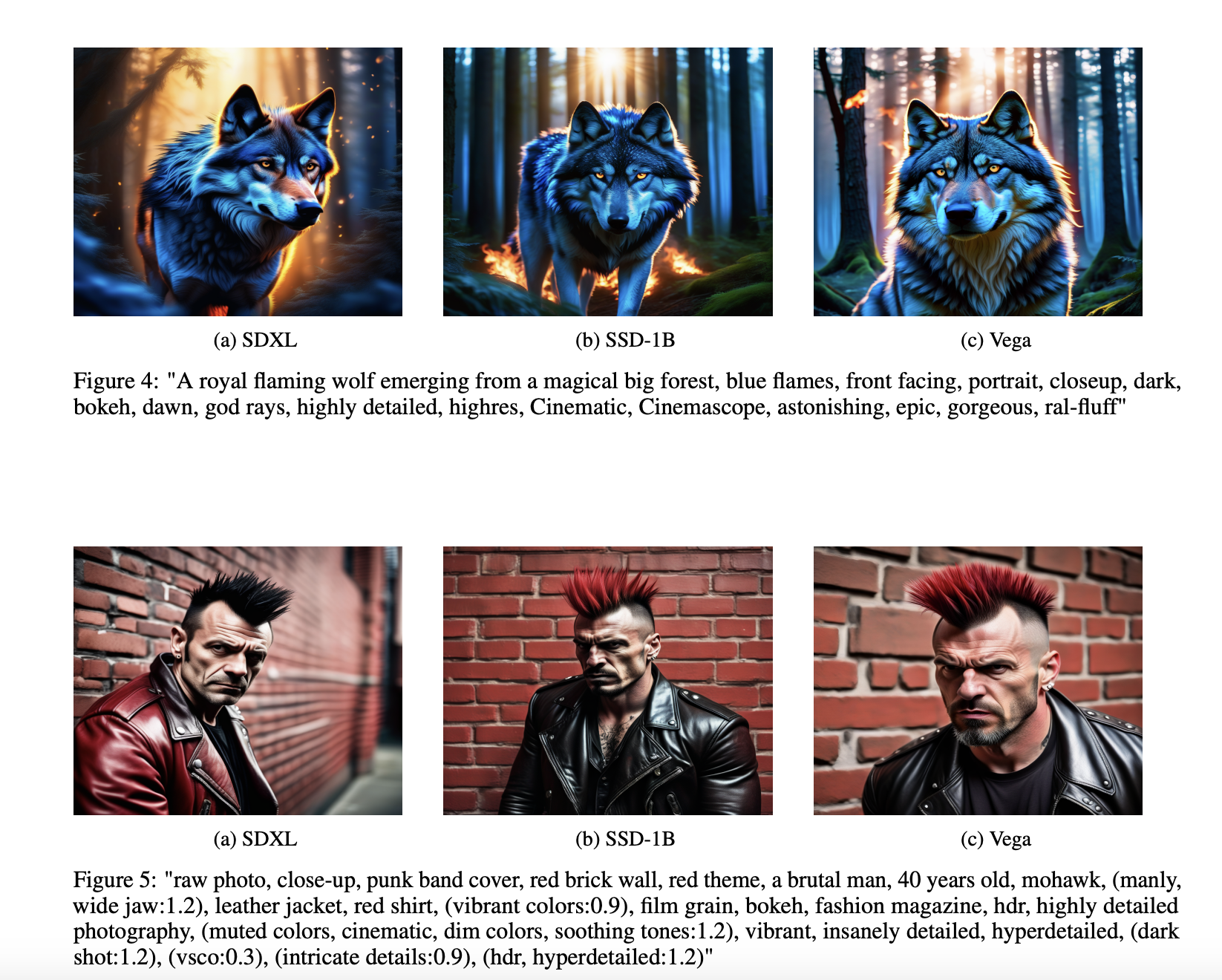

Segmind Stable Diffusion and Segmind-Vega closely mimic the outputs of the original model, as evidenced by comparative image generation tests. They achieve significant improvements in computational efficiency, with up to 60% speedup for Segmind Stable Diffusion and up to 100% for Segmind-Vega. This boost in efficiency is a major stride, considering it does not come at the cost of image quality. A comprehensive blind human preference study involving over a thousand images and numerous users revealed a marginal preference for the SSD-1B model over the larger SDXL model, underscoring the quality preservation in these distilled versions.

In conclusion, this research presents several key takeaways:

- Adopting Progressive Knowledge Distillation offers a viable solution to the computational efficiency challenge in text-to-image models.

- By selectively eliminating specific layers and blocks, the researchers have significantly reduced the model size while maintaining image generation quality.

- The distilled models, Segmind Stable Diffusion and Segmind-Vega retain high-quality image synthesis capabilities and demonstrate remarkable improvements in computational speed.

- The methodology’s success in balancing efficiency with quality paves the way for its potential application in other large-scale models, enhancing the accessibility and utility of advanced AI technologies.

Check out the Paper and Project Page. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our 36k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our Telegram Channel

![]()

Hello, My name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.

[ad_2]

Source link