TikTok Researchers Introduce ‘Depth Anything’: A Highly Practical Solution for Robust Monocular Depth Estimation


Foundation models are large deep-learning neural networks that serve as a starting point for developing effective ML models. Trained on large-scale data, they exhibit exceptional zero-/few-shot performance on numerous tasks, which has made them invaluable in natural language processing and computer vision. Foundation models have also been applied to monocular depth estimation (MDE), i.e., estimating depth from a single image, a task with wide applications in autonomous vehicles, robotics, and virtual reality. However, because building datasets with millions of depth labels is difficult, large-scale training for MDE remains underexplored, and existing MDE models still perform poorly in some scenarios.

To address this issue, researchers from The University of Hong Kong, TikTok, Zhejiang Lab, and Zhejiang University have developed a foundation model for MDE that produces high-quality depth information from images. Traditional depth datasets are built with depth sensors, stereo matching, or structure from motion (SfM), all of which are time-consuming and costly. In contrast, this work focuses on large-scale unlabeled images, which are cheap and simple to acquire, diverse, and easy to annotate.

Their approach uses both labeled and unlabeled data, with the main focus on the latter. The researchers collected 1.5 million labeled images from six public datasets. For the unlabeled images, they designed a data engine that automatically generates depth annotations: an initial MDE model (the teacher) is first trained on the labeled images and then used to pseudo-label the unlabeled ones, creating a self-training pipeline.
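The labeled-then-pseudo-labeled pipeline described above can be illustrated with a deliberately tiny sketch. Everything here is a hypothetical stand-in: each "image" is reduced to one float feature, each "depth map" to one float, and the "depth model" (`fit_depth_model`) is a closed-form linear fit rather than a neural network. It only shows the data flow: train a teacher on labeled data, let it annotate unlabeled inputs, then train a student on both.

```python
from typing import Callable, List, Tuple

# Toy stand-in: each "image" is a single float feature and its "depth map" a single float.
Sample = Tuple[float, float]
Model = Callable[[float], float]


def fit_depth_model(data: List[Sample]) -> Model:
    """Hypothetical toy 'depth model': closed-form least-squares fit of depth = a * x + b."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in data)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def self_train(labeled: List[Sample], unlabeled: List[float]) -> Tuple[Model, Model, List[Sample]]:
    # 1. Train the initial (teacher) model on labeled data only.
    teacher = fit_depth_model(labeled)
    # 2. Let the teacher annotate the unlabeled inputs with pseudo-depth labels.
    pseudo_labeled = [(x, teacher(x)) for x in unlabeled]
    # 3. Train a fresh student model on labeled and pseudo-labeled data jointly.
    student = fit_depth_model(labeled + pseudo_labeled)
    return teacher, student, pseudo_labeled


labeled = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
unlabeled = [3.0, 4.0]
teacher, student, pseudo = self_train(labeled, unlabeled)
print(pseudo)  # [(3.0, 7.0), (4.0, 9.0)]
```

In this noise-free toy the student simply recovers the teacher's line; in the real setting the benefit comes from the far larger and more diverse unlabeled pool, combined with the harder training target on it.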

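In the joint learning phase, the model is challenged with a tougher optimization target: strong perturbations such as color distortion and CutMix are applied to the unlabeled images, so the model must acquire additional visual knowledge instead of simply reproducing the teacher's pseudo-labels. In addition, rather than adding an auxiliary semantic segmentation task for better scene understanding, the researchers propose inheriting rich semantic priors from a pre-trained encoder (DINOv2) through a feature alignment constraint.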
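The two ideas in this paragraph, strongly perturbing unlabeled inputs and keeping the depth encoder's features close to those of a frozen pre-trained encoder, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: `strong_perturb` is a hypothetical single brightness shift standing in for heavier augmentations, features are plain Python lists, and the loss is a mean of (1 − cosine similarity) per pixel in which pixels already above a tolerance `margin` contribute nothing. The margin value of 0.85 is an assumption chosen for illustration.

```python
import math
import random
from typing import List, Sequence

Vector = Sequence[float]


def strong_perturb(pixels: List[float], rng: random.Random, max_shift: float = 0.3) -> List[float]:
    """Hypothetical strong perturbation: one random global brightness shift, clipped to [0, 1].
    (Stands in for heavier augmentations such as color jitter, blur, or CutMix.)"""
    shift = rng.uniform(-max_shift, max_shift)
    return [min(1.0, max(0.0, p + shift)) for p in pixels]


def cosine_similarity(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def feature_alignment_loss(student_feats: List[Vector], frozen_feats: List[Vector],
                           margin: float = 0.85) -> float:
    """Mean of (1 - cosine similarity) over all per-pixel feature pairs.
    Pixels whose similarity already reaches `margin` contribute zero (assumed tolerance margin)."""
    total = 0.0
    for f, g in zip(student_feats, frozen_feats):
        sim = cosine_similarity(f, g)
        if sim < margin:
            total += 1.0 - sim
    return total / len(student_feats)


rng = random.Random(0)
print(strong_perturb([0.1, 0.5, 0.95], rng))
student = [[1.0, 0.0], [0.0, 1.0]]
frozen = [[1.0, 0.0], [1.0, 0.0]]
print(feature_alignment_loss(student, frozen))  # 0.5
```

The margin leaves the depth model some freedom: features only need to stay roughly aligned with the frozen encoder, since depth estimation may legitimately require representations that differ from purely semantic ones.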

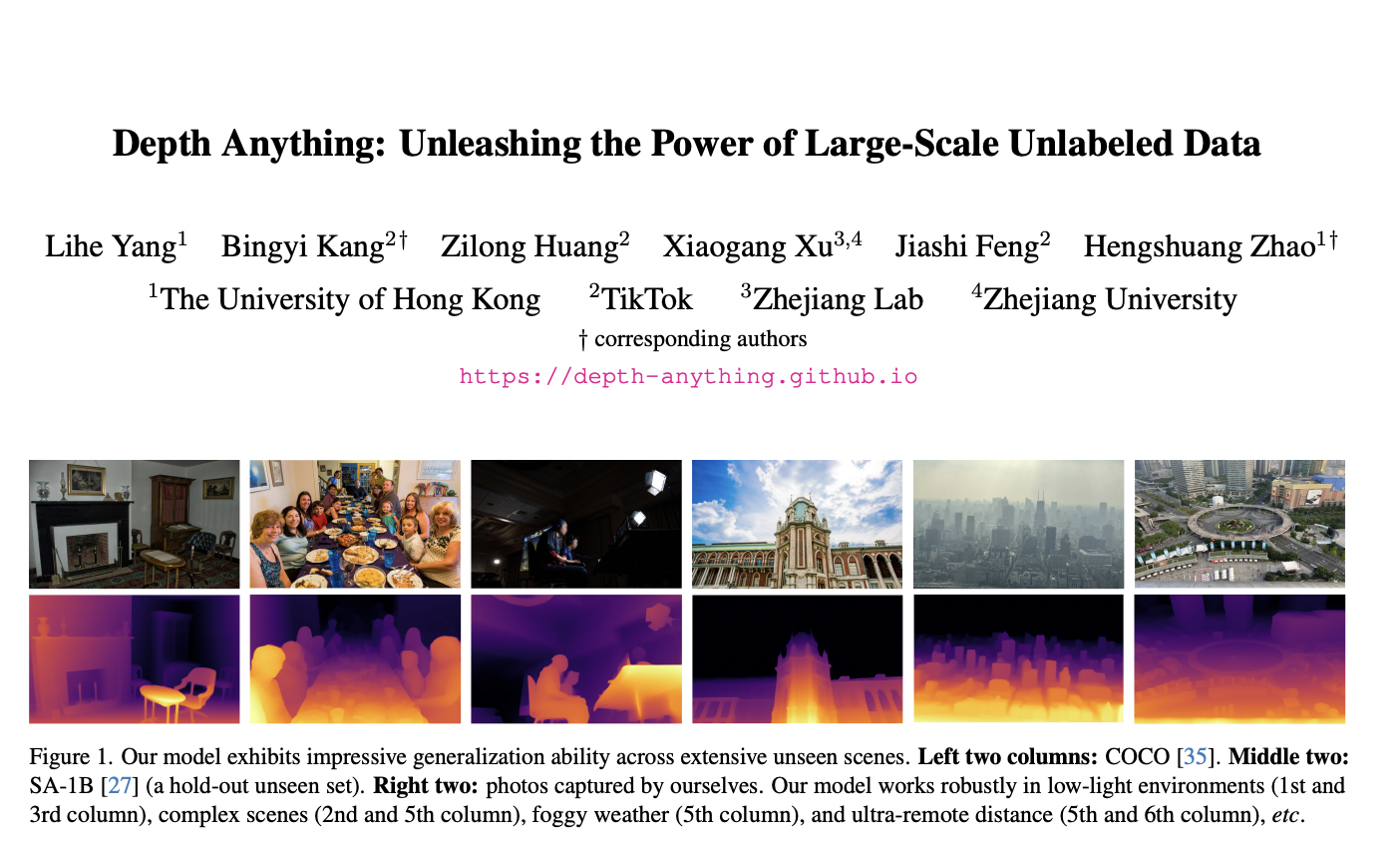

For evaluation, the researchers compared the model's zero-shot relative depth estimation on six unseen datasets against the best model from the latest MiDaS v3.1. Depth Anything significantly outperforms MiDaS across a wide range of scenes on these unseen datasets. When fine-tuned with metric depth labels, it also yields better metric depth estimation than the MiDaS-based ZoeDepth. Finally, in semantic segmentation experiments, the researchers observe that the Depth Anything encoder delivers superior results, suggesting that it could serve as a generic multi-task encoder for both middle-level and high-level visual perception systems.
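The article does not describe how zero-shot relative depth is scored, so the following is only a sketch of a common protocol for affine-invariant depth models, offered as an assumption: the prediction is first aligned to the ground truth with a least-squares scale and shift, then compared with two standard metrics, absolute relative error (AbsRel) and the fraction of pixels within a 1.25 ratio (δ1). Published protocols often perform this alignment in inverse-depth (disparity) space; for brevity this sketch aligns directly in depth space and flattens each depth map to a list of positive values.

```python
from typing import List


def align_scale_shift(pred: List[float], gt: List[float]) -> List[float]:
    """Least-squares scale s and shift t so that s * pred + t best matches gt."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_g = sum(gt) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    cov = sum((p - mean_p) * (g - mean_g) for p, g in zip(pred, gt))
    s = cov / var_p
    t = mean_g - s * mean_p
    return [s * p + t for p in pred]


def abs_rel(pred: List[float], gt: List[float]) -> float:
    """Mean absolute relative error |pred - gt| / gt (gt assumed positive)."""
    return sum(abs(p - g) / g for p, g in zip(pred, gt)) / len(gt)


def delta1(pred: List[float], gt: List[float], threshold: float = 1.25) -> float:
    """Fraction of pixels with max(pred/gt, gt/pred) < threshold (values assumed positive)."""
    hits = sum(1 for p, g in zip(pred, gt) if max(p / g, g / p) < threshold)
    return hits / len(gt)


gt = [1.0, 2.0, 4.0]
raw_pred = [3.0, 5.0, 9.0]  # correct up to scale and shift: 2 * gt + 1
aligned = align_scale_shift(raw_pred, gt)
print(abs_rel(aligned, gt), delta1(aligned, gt))
```

Lower AbsRel and higher δ1 are better; because of the alignment step, a model is not penalized for predicting depth only up to an unknown scale and shift, which is exactly what a zero-shot relative depth model outputs.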

In conclusion, Depth Anything offers an effective solution for robust MDE by exploiting cheap and diverse unlabeled images. The researchers made the optimization target on unlabeled images more challenging and preserved rich semantic priors from pre-trained models, which together yield much better performance and strong zero-shot capabilities. The model also surpasses the latest MiDaS model, highlighting its potential for downstream depth estimation tasks.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
